Deep learning is a machine learning technique based on learning representations of data, loosely inspired by the way the human brain processes information. A human's sensory inputs (sight, hearing, smell, taste, touch, position, heat, and so on) feed into a myriad of interconnected neurons that produce perception, recognition and knowledge, in other words, intelligence. In machines, deep learning contributes to the development of artificial intelligence.

Deep learning and artificial intelligence (AI) connect inputs, such as images, measurements, colors, shapes and spectral information, with known features, such as the identity of people, animals, cars, roadblocks, partially hidden objects and other complex situational information. With these methods, machines can even "learn" to discern emotion from facial expressions. However, the training process needs very large amounts of data to work well. For example, to recognize a person in every situation, a machine needs to have recorded, or "seen," that individual displaying many different facial expressions, in different orientations and lighting conditions, and wearing different clothing, hats, etc.

The same is true for recognizing spectral and chemical information: a large amount of qualified data has to be collected and processed. Hyperspectral imaging is one of the largest sources of such data, producing gigabytes of information-rich hypercubes within seconds, each containing both spatial and spectral measurements. Deep learning methods can help sort and search through this vast amount of data, which streamlines the interpretation of hyperspectral data and helps make the most of this remarkable technology.
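
To make the idea of a hypercube concrete, the following Python sketch (assuming NumPy is available) builds a small synthetic cube and reshapes it into one spectrum per pixel, a common input layout for pixel-wise deep learning classifiers. The dimensions, the 16-bit data type and the per-band standardization step are illustrative assumptions, not the parameters of any particular camera or processing pipeline.

```python
import numpy as np

# Hypothetical dimensions for illustration only: 100 lines x 64 pixels x 224 bands.
rows, cols, bands = 100, 64, 224

# A synthetic hypercube of 16-bit values standing in for real camera data.
rng = np.random.default_rng(0)
cube = rng.integers(0, 4096, size=(rows, cols, bands), dtype=np.uint16)

# Every spatial pixel carries a full spectrum; flatten to shape (pixels, bands).
spectra = cube.reshape(-1, bands).astype(np.float32)

# Per-band standardization, an assumed preprocessing step before training.
mean = spectra.mean(axis=0)
std = spectra.std(axis=0) + 1e-8  # small constant avoids division by zero
normalized = (spectra - mean) / std

print(cube.nbytes)        # raw size in bytes: rows * cols * bands * 2
print(normalized.shape)   # (6400, 224)
```

The raw size is simply rows × columns × bands × bytes per value, so it grows quickly as any of those dimensions increases, which is why full-size hyperspectral scans reach the data volumes described above.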

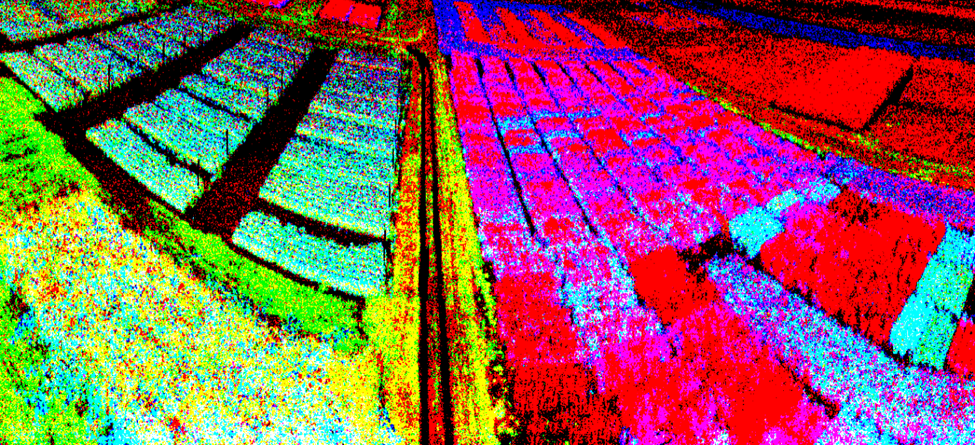

An image of an experimental corn and soybean field from a VNIR hyperspectral camera.